I recently wrote about 20 terms every self-respecting futurist should know, but now it's time to turn our attention to the opposite. Here are 10 pseudofuturist catchphrases and concepts that need to be eliminated from your vocabulary.

Top image: Screen grab from Elysium.

1. "Transcendence"

Some futurists toss this word around in a way that's not too far removed from its religious roots. The hope is that our technologies can help us experience our existence beyond normal or physical bounds. Now, it very well may be true that we'll eventually learn how to emulate brains in a computer, but it's an open question as to whether or not we'll be able to transfer consciousness itself. In other words, the future may not be for us; it'll be for our copies. So it's doubtful any biological being will ever literally experience the process of transcension (just the illusion of it).

What's more, life in a "transcendent" digitized realm, while full of incredible potential, will be no walk in the park; full release, or transcendence, is not likely an achievable goal. Emulated minds, or ems, will be prone to hacking, deletion, unauthorized copying, and subsistence wages. Indeed, a so-called uploaded mind may be free from its corporeal form, but it won't be free from economic and physical realities, including the safety and reliability of the supercomputer running the ems, and the costs involved in procuring sufficient processing power and storage space.

2. "The Singularity"

Vernor Vinge co-opted this term from cosmology as a way to describe a blind spot in our predictive thinking, or more specifically our inability to predict what will happen after the advent of greater-than-human machine intelligence. But since that time, the Technological Singularity has degenerated into a term devoid of any true meaning.

In addition to its quasi-religious connotations, it has become a veritable Rorschach Test for futurists. The Singularity has been used to describe accelerating change or a future time when progress in technology occurs almost instantly. It has also been used to describe humanity's transition into a posthuman condition, mind uploads, and the advent of a utopian era. Because of all the baggage this term has accumulated, and because the peril that awaits us is coming into clearer focus (e.g. the Intelligence Explosion), it's a term that needs to be put to bed, replaced by more substantive and unambiguous hypotheses.

3. "Technology Will Save the Future"

I wholeheartedly agree that we should use technology to build the kind of future we want for ourselves and our descendants. Absolutely. But it's important for us to acknowledge the challenges we're sure to face in trying to do so and the unintended consequences of our efforts.

Technology is a double-edged sword that's constantly putting us on the defensive. Our inventions often produce outcomes that need to be provisioned for. Guns have produced the need for gun control and bulletproof vests. Software has produced the need for antivirus programs and firewalls. Industrialization has resulted in labour unions, climate change, and the demand for geoengineering efforts. Airplanes have been co-opted as terrorist weapons. And on and on and on.

The evolution of our technologies could result in a future in which our planet is wrecked and depleted, our privacy gone, our civil liberties severely curtailed, and our political, social and economic structures severely altered. So while we should still strive to create the future, we must remember that we're going to have to adapt to this future.

4. "Will"

We often speak about things that will happen in the future as if there's a certain inevitability to them, or as if we're masters of our own destinies. Trouble is, different people have different visions of the future depending on their needs, values, and place of privilege; there will always be a tension arising from competing interests. What's more, we will undoubtedly hit some intractable technological and economic barriers along the way, not to mention some black swans (unexpected events) and mules (unexpected events beyond our current understanding of how the world works).

Another perspective comes from Jayar LaFontaine, a Foresight Strategist with Idea Couture. He told me,

The word "will" is wildly overused by futurists. It's small and innocuous, so it can be slipped into speech to create a sense of authority which is almost always inappropriate. More often than not, it indicates a futurist's personal biases on a subject rather than any serious assessment of certainty. And it can shut down fruitful conversations about the future, which for me is the whole point.

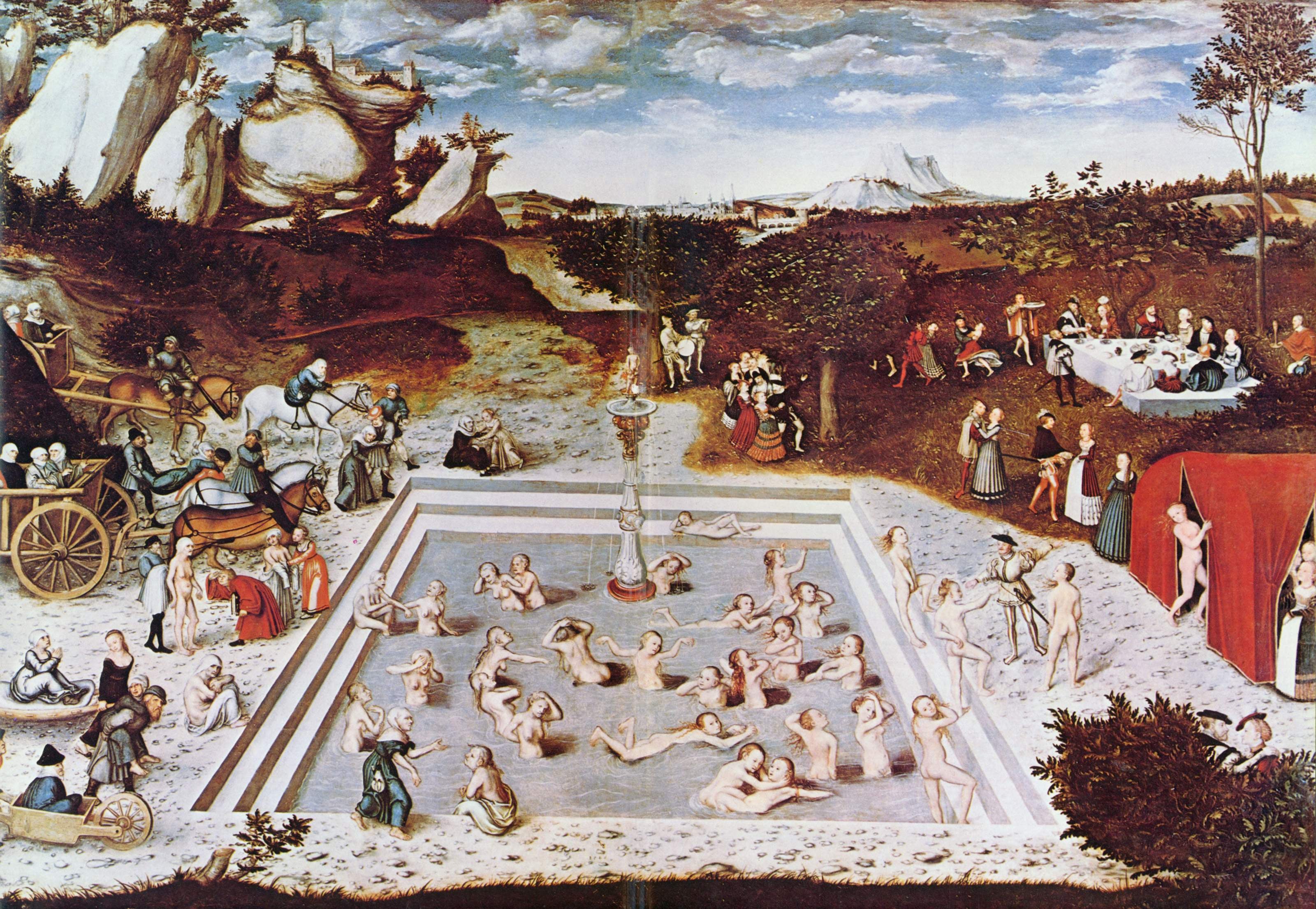

5. "Immortality"

Some folks in the radical life extension and transhumanist communities like to talk about achieving "immortality." Indeed, there's a very good chance that future humans will eventually enter into a state of so-called negligible senescence (the cessation of aging) — a remarkable development that will likely come about through the convergence of several tech sectors, including biotechnology, cybernetics, neuroscience, molecular nanotechnology, and others. But it's a prospect that has been taken just a bit too far.

The Fountain of Youth, 1546 painting by Lucas Cranach the Elder.

First, accidental or unavoidable deaths (like getting hit by a streetcar, being murdered, or inadvertently flying a spacecraft into a supernova) will always be a part of the human (or posthuman) condition. Indeed, the longer we live, the greater the chance we have of getting killed in one way or another. Second, the universe is a finite thing, which means our existence is finite, too. That could mean an ultimate fate decided by the heat death of the universe, the Big Crunch, or the Big Rip. And contrary to the thinking of Frank Tipler, there's no loophole, not even a life-resurrecting Omega Point.

6. "Disruptive"

Virtually every gadget that comes out of Silicon Valley these days is heralded as being disruptive. I don't think this word means what these companies think it means.

Honestly, for a technology to be truly disruptive it has to shake the foundations of society. Looking back through history, it's safe to say that the telegraph, trains, automobiles, and the Internet were truly disruptive. Looking ahead, it'll be various developments in molecular assembly, the social and economic consequences of mass automation, and the proliferation of AI and AGI.

7. "Future Shock"

This is a term that's getting old fast.

Sure, such a thing may have existed in the early 1970s when Alvin Toffler first came up with the idea (though I doubt it), but does anyone truly suffer from "future shock"? Toffler described it as "shattering stress and disorientation" caused by "too much change in too short a period of time," but I don't recall seeing it in the DSM-5.

No doubt, many folks in our society rail against change, such as those who resist gay marriage or universal healthcare, but it would be inaccurate and unfair to refer to them as being in a state of shock. Reactionary, maybe.

8. "Moore's Law"

Nope, not a law. At best it's a consistent empirical regularity, and a fairly obvious one at that. Yes, processing speed is getting faster and faster. But why fetishize it by calling it a law? There are other similar observable regularities, including steady advancements in software, telecommunications, materials miniaturization, and even biotechnology. And in fact, mathematical "laws" can predict industrial growth and productivity in many sectors. What's more, Moore's Law is a poor barometer of progress (something it's often used for), particularly social and economic progress.

9. "The Robot Apocalypse"

Let's assume for a moment that an artificial superintelligence eventually emerges and it decides to destroy all humans (a huge stretch given that it's more likely to do this by accident or because it's indifferent). Because AI is often conflated with robotics, many people say the ensuing onslaught is likely to arrive in the form of marauding machines — the so-called robopocalypse.

Okay, sure, that's certainly one way a maniacal ASI could do it, but it's hardly the most efficient. A more likely scenario would involve the destruction of the atmosphere or terrestrial surface with some kind of nanophage. Or, it could infect the entire population with a deadly virus. Alternatively, it could poison all water and the food supply. Or something unforeseen; it doesn't matter. The point is that it doesn't need to go to such clunky lengths to destroy us should it choose to do so.

10. "The End Of Humanity"

This one really bugs me. It's both misanthropic and an inaccurate depiction of the future. Some people have gotten it into their heads that the advent of the next era of human evolution necessarily implies the end of humanity. This is unlikely. Not only will biological, unmodified humans exist in the far future, they will always reserve the right to stay that way. So-called transhumans and posthumans are likely to exist (whether they be genetically modified, cybernetic, or digital), but they'll always inhabit a world occupied by regular plain old Homo sapiens.

(image: bikeriderlondon/Shutterstock)
